8. Processor Configuration and Control

This section describes the configuration and control of the processor’s power and performance states. The major controls over the processors are:

Processor power states: C0, C1, C2, C3, … Cn

Processor clock throttling

Processor performance states: P0, P1, … Pn

These controls are used in combination by OSPM to achieve the desired balance of the following sometimes conflicting goals:

Performance

Power consumption and battery life

Thermal requirements

Noise-level requirements

Because the goals interact with each other, the operating software needs to implement a policy as to when and where tradeoffs between the goals are to be made (see note below). For example, the operating software would determine when the audible noise of the fan is undesirable and would trade off that requirement for lower thermal requirements, which can lead to lower processing performance. Each processor configuration and control interface is discussed in the following sections, along with how the controls interact with the various goals.

Note

A thermal warning leaves room for operating system tradeoffs (to start the fan or reduce performance), without issuing a critical thermal alert.

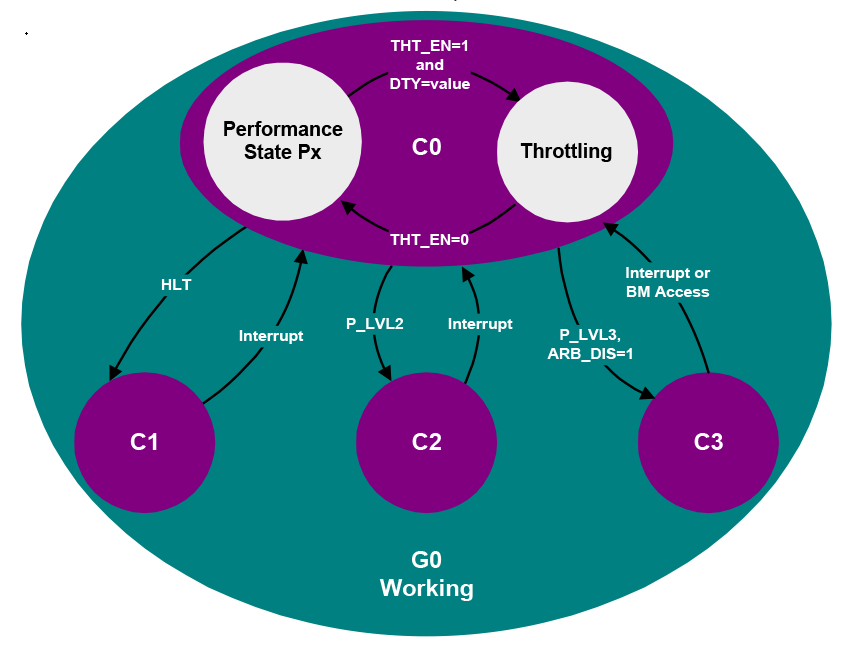

8.1. Processor Power States

ACPI defines the power state of system processors while in the G0 working state as being either active (executing) or sleeping (not executing) - see note below. Processor power states are designated C0, C1, C2, C3, … Cn. The C0 power state is an active power state where the CPU executes instructions. The C1 through Cn power states are processor sleeping states where the processor consumes less power and dissipates less heat than leaving the processor in the C0 state. While in a sleeping state, the processor does not execute any instructions. Each processor sleeping state has a latency associated with entering and exiting that corresponds to the power savings. In general, the longer the entry/exit latency, the greater the power savings when in the state. To conserve power, OSPM places the processor into one of its supported sleeping states when idle. While in the C0 state, ACPI allows the performance of the processor to be altered through a defined “throttling” process and through transitions into multiple performance states (P-states). A diagram of processor power states is provided below.

Note

These CPU states map into the G0 working state, and the Cx states only apply to the G0 state. In the G3 sleeping state, the state of the CPU is undefined.

Fig. 8.1 Processor Power States

ACPI defines logic on a per-CPU basis that OSPM uses to transition between the different processor power states. This logic is optional, and is described through the FADT table and processor objects (contained in the hierarchical namespace). The fields and flags within the FADT table describe the symmetrical features of the hardware, and the processor object contains the location for the particular CPU’s clock logic (described by the P_BLK register block and _CST objects).

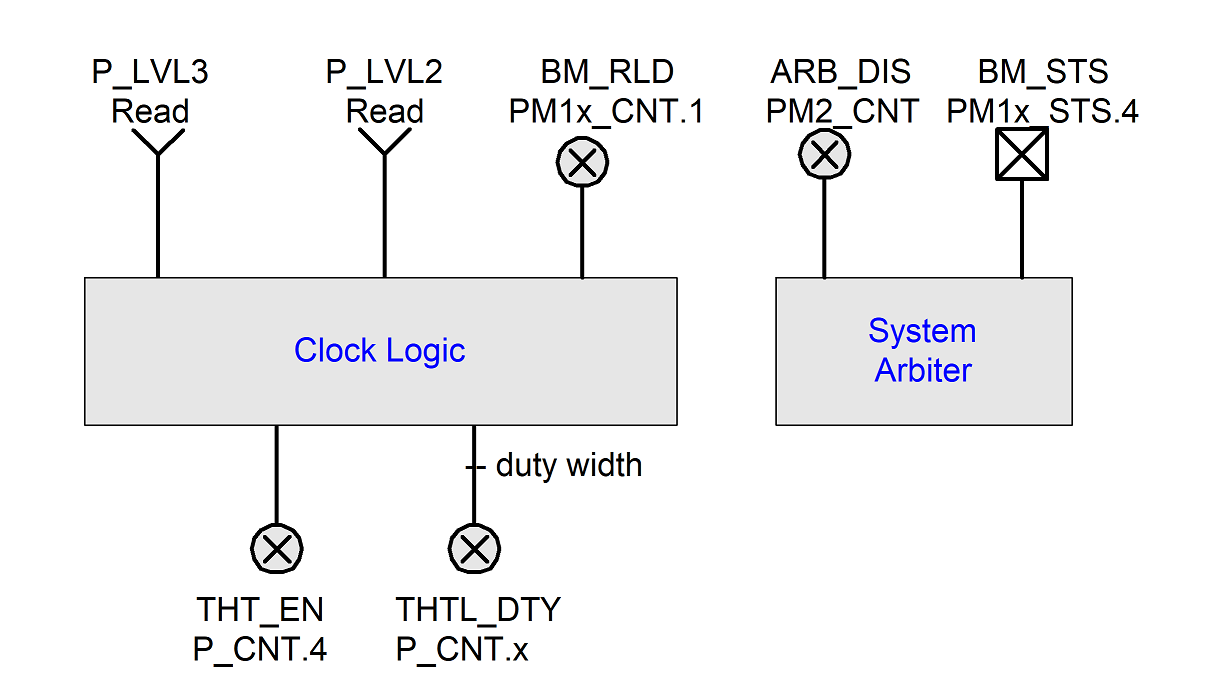

The P_LVL2 and P_LVL3 registers provide optional support for placing the system processors into the C2 or C3 states. The P_LVL2 register is used to sequence the selected processor into the C2 state, and the P_LVL3 register is used to sequence the selected processor into the C3 state. Additional support for the C3 state is provided through the bus master status and arbiter disable bits (BM_STS in the PM1_STS register and ARB_DIS in the PM2_CNT register). System software reads the P_LVL2 or P_LVL3 register to enter the C2 or C3 power state. The hardware must put the processor into the proper clock state precisely on the read operation to the appropriate P_LVLx register. The platform may alternatively define interfaces allowing OSPM to enter C-states using the _CST object, which is defined in _CST (C States).

Processor power state support is symmetric when presented via the FADT and P_BLK interfaces; OSPM assumes all processors in a system support the same power states. If processors have non-symmetric power state support, then the platform runtime firmware will choose and use the lowest common power states supported by all the processors in the system through the FADT table. For example, if the CPU0 processor supports all power states up to and including the C3 state, but the CPU1 processor only supports the C1 power state, then OSPM will only place idle processors into the C1 power state (CPU0 will never be put into the C2 or C3 power states). Notice that the C1 power state must be supported. The C2 and C3 power states are optional (see the PROC_C1 flag in the FADT table description in System Description Table Header).
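The "lowest common power state" rule above can be illustrated with a small sketch. The function name and the dictionary layout are hypothetical and chosen only for illustration; the sketch assumes each processor's deepest supported state is known as a C-state number.

```python
def common_idle_limit(deepest_by_cpu):
    """Return the deepest C-state number that every processor supports.

    deepest_by_cpu maps a processor name to the number of its deepest
    supported C-state (1 for C1, 2 for C2, 3 for C3). Hypothetical layout.
    """
    if not deepest_by_cpu:
        raise ValueError("at least one processor is required")
    limit = min(deepest_by_cpu.values())
    if limit < 1:
        # The text requires C1 support on every processor.
        raise ValueError("every processor must support C1")
    return limit


# The example from the text: CPU0 supports up to C3, CPU1 only C1.
print(common_idle_limit({"CPU0": 3, "CPU1": 1}))
```

With the values from the text, the result is 1, so idle processors are only placed into C1.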

The following sections describe processor power states in detail.

8.1.1. Processor Power State C0

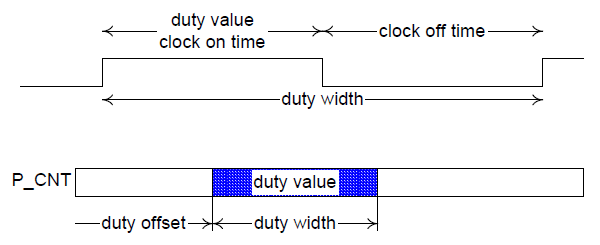

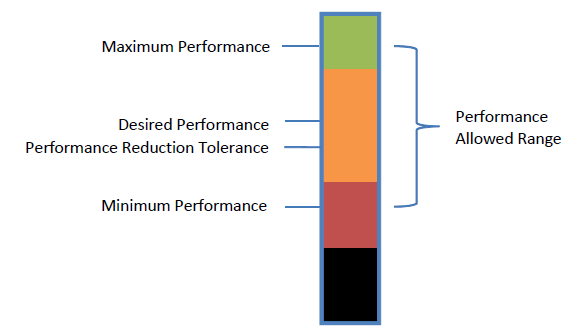

While the processor is in the C0 power state, it executes instructions. While in the C0 power state, OSPM can generate a policy to run the processor at less than maximum performance. The clock throttling mechanism provides OSPM with the functionality to perform this task in addition to thermal control. The mechanism allows OSPM to program a value into a register that reduces the processor’s performance to a percentage of maximum performance.

Fig. 8.2 Throttling Example

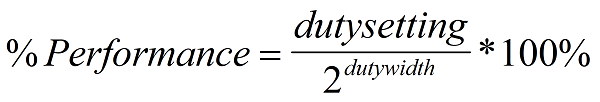

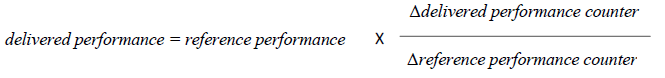

The FADT contains the duty offset and duty width values. The duty offset value determines the offset within the P_CNT register of the duty value. The duty width value determines the number of bits used by the duty value (which determines the granularity of the throttling logic). The performance of the processor by the clock logic can be expressed with the following equation:

Fig. 8.3 Equation 1 Duty Cycle Equation

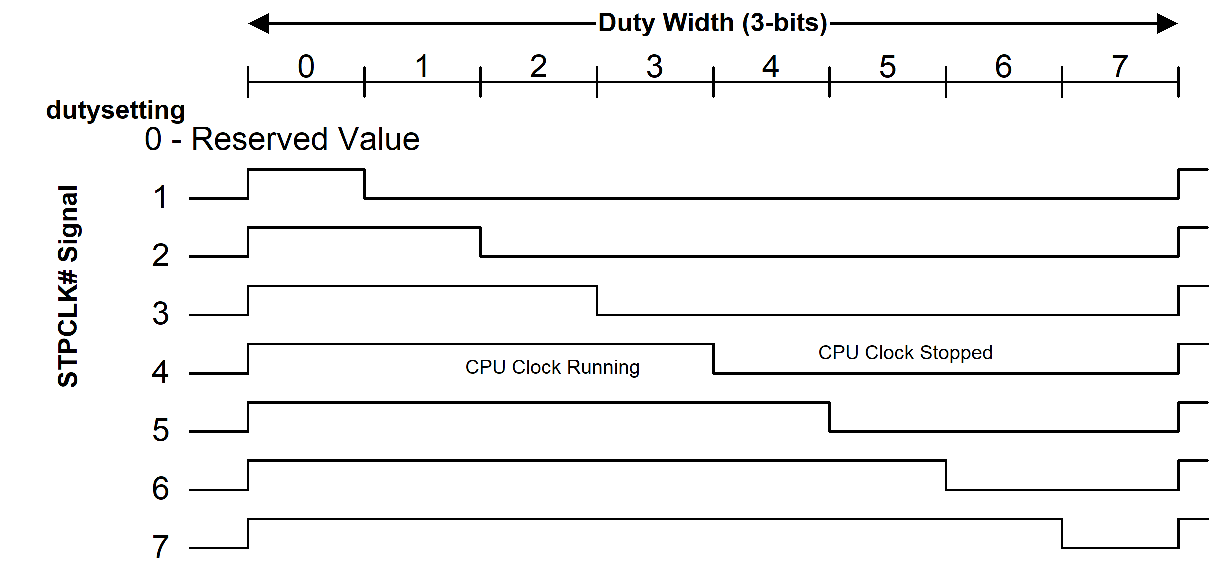

Nominal performance is defined as “close as possible, but not below the indicated performance level.” OSPM will use the duty offset and duty width to determine how to access the duty setting field. OSPM will then program the duty setting based on the thermal condition and desired power of the processor object. OSPM calculates the nominal performance of the processor using the equation expressed in Equation 1. Notice that a duty setting of zero is reserved.

For example, the clock logic could use the stop grant cycle to emulate a divided processor clock frequency on an IA processor (through the use of the STPCLK# signal). This signal internally stops the processor’s clock when asserted LOW. To implement logic that provides eight levels of clock control, the STPCLK# pin could be asserted as follows (to emulate the different frequency settings):

Fig. 8.4 Example Control for the STPCLK

To start the throttling logic, OSPM sets the desired duty setting and then sets the THT_EN bit HIGH. To change the duty setting, OSPM will first reset the THT_EN bit LOW, then write another value to the duty setting field while preserving the other unused fields of this register, and then set the THT_EN bit HIGH again.
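The duty setting calculation and the three-step update sequence can be sketched as follows. This is a minimal illustration, not a driver. It assumes Equation 1 has the form performance = duty setting / 2^(duty width). The function names are hypothetical, the register is modeled as a plain integer, and the THT_EN bit position is passed as a parameter (defaulting to bit 4, which is an assumption here).

```python
import math


def duty_setting_for(target_fraction, duty_width):
    """Smallest non-zero duty setting whose nominal performance is not
    below target_fraction (a value in the range (0, 1])."""
    if not 0.0 < target_fraction <= 1.0:
        raise ValueError("target_fraction must be in (0, 1]")
    steps = 1 << duty_width                      # 2 ** duty_width
    setting = math.ceil(target_fraction * steps)
    # Zero is reserved, and the field cannot hold more than steps - 1.
    return max(1, min(setting, steps - 1))


def nominal_performance(setting, duty_width):
    """Assumed form of Equation 1: setting / 2 ** duty_width."""
    return setting / (1 << duty_width)


def throttle_writes(p_cnt, setting, duty_offset, duty_width, tht_en_bit=4):
    """Return the three successive register values OSPM would write."""
    field_mask = ((1 << duty_width) - 1) << duty_offset
    enable_mask = 1 << tht_en_bit
    writes = []
    p_cnt &= ~enable_mask                        # 1. reset THT_EN LOW
    writes.append(p_cnt)
    # 2. replace only the duty field, preserving every other bit
    p_cnt = (p_cnt & ~field_mask) | (setting << duty_offset)
    writes.append(p_cnt)
    p_cnt |= enable_mask                         # 3. set THT_EN HIGH
    writes.append(p_cnt)
    return writes


setting = duty_setting_for(0.3, duty_width=3)
print(setting, nominal_performance(setting, 3))
print([hex(v) for v in throttle_writes(0x117, setting, 0, 3)])
```

Because the largest field value still yields less than full performance under this equation, the sketch treats a request for 100% as the highest available setting; running unthrottled corresponds to leaving THT_EN LOW.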

The example logic model is shown below:

Fig. 8.5 ACPI Clock Logic (One per Processor)

Implementation of the ACPI processor power state controls minimally requires the support of a single CPU sleeping state (C1). All of the CPU power states occur in the G0/S0 system state; they have no meaning when the system transitions into a sleeping state (S1-S4). ACPI defines the attributes (semantics) of the different CPU states (defines four of them). It is up to the platform implementation to map an appropriate low-power CPU state to the defined ACPI CPU state.

ACPI clock control is supported through the optional processor register block (P_BLK). ACPI requires that there be a unique processor register block for each CPU in the system. Additionally, ACPI requires that the clock logic for multiprocessor systems be symmetrical when using the P_BLK and FADT interfaces; if processor CPU0 supports the C1, C2, and C3 states, but processor CPU1 only supports the C1 state, then OSPM will limit all processors to the C1 state when idle.

The following sections define the different ACPI CPU sleeping states.

8.1.2. Processor Power State C1

All processors must support this power state. This state is supported through a native instruction of the processor (HLT for IA 32-bit processors), and assumes no hardware support is needed from the chipset. The hardware latency of this state must be low enough that OSPM does not consider the latency aspect of the state when deciding whether to use it. Aside from putting the processor in a power state, this state has no other software-visible effects. In the C1 power state, the processor is able to maintain the context of the system caches.

The hardware can exit this state for any reason, but must always exit this state when an interrupt is to be presented to the processor.

8.1.3. Processor Power State C2

This processor power state is optionally supported by the system. If present, the state offers improved power savings over the C1 state and is entered by using the P_LVL2 command register for the local processor or an alternative mechanism as indicated by the _CST object. The worst-case hardware latency for this state is declared in the FADT and OSPM can use this information to determine when the C1 state should be used instead of the C2 state. Aside from putting the processor in a power state, this state has no other software-visible effects. OSPM assumes the C2 power state has lower power and higher exit latency than the C1 power state.

The C2 power state is an optional ACPI clock state that needs chipset hardware support. This clock logic consists of an interface that can be manipulated to cause the processor complex to precisely transition into a C2 power state. In a C2 power state, the processor is assumed capable of keeping its caches coherent; for example, bus master and multiprocessor activity can take place without corrupting cache context.

The C2 state puts the processor into a low-power state optimized around multiprocessor and bus master systems. OSPM will cause an idle processor complex to enter a C2 state if there is bus master or multiprocessor activity (which will prevent OSPM from placing the processor complex into the C3 state). The processor complex is able to snoop bus master or multiprocessor CPU accesses to memory while in the C2 state.

The hardware can exit this state for any reason, but must always exit this state whenever an interrupt is to be presented to the processor.

8.1.4. Processor Power State C3

This processor power state is optionally supported by the system. If present, the state offers improved power savings over the C1 and C2 states and is entered by using the P_LVL3 command register for the local processor or an alternative mechanism as indicated by the _CST object. The worst-case hardware latency for this state is declared in the FADT, and OSPM can use this information to determine when the C1 or C2 state should be used instead of the C3 state. While in the C3 state, the processor’s caches maintain state but the processor is not required to snoop bus master or multiprocessor CPU accesses to memory.

The hardware can exit this state for any reason, but must always exit this state when an interrupt is to be presented to the processor or when BM_RLD is set and a bus master is attempting to gain access to memory.

OSPM is responsible for ensuring that the caches maintain coherency. In a uniprocessor environment, this can be done by using the PM2_CNT.ARB_DIS bus master arbitration disable register to ensure bus master cycles do not occur while in the C3 state. In a multiprocessor environment, the processors’ caches can be flushed and invalidated such that no dynamic information remains in the caches before entering the C3 state.

There are two mechanisms for supporting the C3 power state:

Having OSPM flush and invalidate the caches prior to entering the C3 state.

Providing hardware mechanisms to prevent masters from writing to memory (uniprocessor-only support).

In the first case, OSPM will flush the system caches prior to entering the C3 state. As there is normally much latency associated with flushing processor caches, OSPM is likely to only support this in multiprocessor platforms for idle processors. Flushing of the cache is accomplished through one of the defined ACPI mechanisms (described below in Flushing Caches).

In uniprocessor-only platforms that provide the needed hardware functionality (defined in this section), OSPM will attempt to place the platform into a mode that will prevent system bus masters from writing into memory while the processor is in the C3 state. This is accomplished by disabling bus masters prior to entering a C3 power state. Upon a bus master requesting an access, the CPU will awaken from the C3 state and re-enable bus master accesses.

OSPM uses the BM_STS bit to determine the power state to enter when considering a transition to or from the C2/C3 power state. The BM_STS is an optional bit that indicates when bus masters are active. OSPM uses this bit to determine the policy between the C2 and C3 power states: a lot of bus master activity demotes the CPU power state to the C2 (or C1 if C2 is not supported), no bus master activity promotes the CPU power state to the C3 power state. OSPM keeps a running history of the BM_STS bit to determine CPU power state policy.

The last hardware feature used in the C3 power state is the BM_RLD bit. This bit determines if the Cx power state is exited as a result of bus master requests. If set, then the Cx power state is exited upon a request from a bus master. If reset, the power state is not exited upon bus master requests. In the C3 state, bus master requests need to transition the CPU back to the C0 state (as the system is capable of maintaining cache coherency), but such a transition is not needed for the C2 state. OSPM can optionally set this bit when using a C3 power state, and clear it when using a C1 or C2 power state.
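The promotion and demotion policy driven by the BM_STS history, together with the optional BM_RLD handling, might be sketched as follows. The window length and the threshold for "a lot of" activity are hypothetical tuning values; the text does not define them. The function name and the tuple return value are also illustrative only.

```python
from collections import deque

WINDOW = 8          # hypothetical: number of BM_STS samples remembered
BUSY_THRESHOLD = 4  # hypothetical: active samples that count as "a lot"


def next_idle_state(history, current, has_c2=True, has_c3=True):
    """Pick the next idle state from recent BM_STS samples.

    history is an iterable of 0/1 BM_STS samples, current is the state
    used last time ("C1", "C2" or "C3"). Returns (state, bm_rld).
    """
    snooping_state = "C2" if has_c2 else "C1"
    if not has_c3:
        state = snooping_state
    else:
        busy = sum(history)
        if busy == 0:
            state = "C3"              # no bus master activity: promote
        elif busy >= BUSY_THRESHOLD:
            state = snooping_state    # heavy activity: demote
        else:
            state = current           # in between: keep the last choice
    # BM_RLD may be set for C3 and cleared for C1/C2.
    return state, state == "C3"


quiet = deque([0] * WINDOW, maxlen=WINDOW)
busy = deque([1, 1, 0, 1, 1, 0, 1, 0], maxlen=WINDOW)
print(next_idle_state(quiet, "C2"))
print(next_idle_state(busy, "C3"))
```

Keeping the previous state for intermediate activity levels is one simple way to avoid oscillating between C2 and C3; an actual OSPM may weigh the history differently.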

8.1.5. Additional Processor Power States

ACPI introduced optional processor power states beyond C3 starting in ACPI 2.0. These power states, C4 … Cn, are conveyed to OSPM through the _CST object defined in _CST (C States). These additional power states are characterized by equivalent operational semantics to the C1 through C3 power states, as defined in the previous sections, but with different entry/exit latencies and power savings. See _CST (C States) for more information.

8.2. Flushing Caches

To support the C3 power state without using the ARB_DIS feature, the hardware must provide functionality to flush and invalidate the processors’ caches (for an IA processor, this would be the WBINVD instruction). To support the S1, S2 or S3 sleeping states, the hardware must provide functionality to flush the platform caches. Flushing of caches is supported by one of the following mechanisms:

Processor instruction to write back and invalidate system caches (WBINVD instruction for IA processors).

Processor instruction to write back but not invalidate system caches (WBINVD instruction for IA processors and some chipsets with partial support; that is, they don’t invalidate the caches).

The ACPI specification expects all platforms to support the local CPU instruction for flushing system caches (with support in both the CPU and chipset), and provides some limited “best effort” support for systems that don’t currently meet this capability. The method used by the platform is indicated through the appropriate FADT fields and flags indicated in this section.

ACPI specifies parameters in the FADT that describe the system’s cache capabilities. If the platform properly supports the processor’s write back and invalidate instruction (WBINVD for IA processors), then this support is indicated to OSPM by setting the WBINVD flag in the FADT.

If the platform supports neither of the first two flushing options, then OSPM can attempt to manually flush the cache if it meets the following criteria:

A cache-enabled sequential read of contiguous physical memory of not more than 2 MB will flush the platform caches.

There are two additional FADT fields needed to support manual flushing of the caches:

FLUSH_SIZE, typically twice the size of the largest cache in the system.

FLUSH_STRIDE, typically the smallest cache line size in the system.
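The access pattern of a manual flush can be modeled as below. This is only a sketch of the read sequence: Python cannot control caching, so the buffer stands in for the contiguous physical memory region, and the function names are hypothetical. The 2 MB bound comes from the criterion above.

```python
MAX_FLUSH_BYTES = 2 * 1024 * 1024  # upper bound stated in the text


def flush_read_offsets(flush_size, flush_stride):
    """Offsets OSPM would read: one per stride across flush_size bytes."""
    if flush_stride <= 0:
        raise ValueError("FLUSH_STRIDE must be positive")
    if not 0 < flush_size <= MAX_FLUSH_BYTES:
        raise ValueError("FLUSH_SIZE must be between 1 byte and 2 MB")
    return range(0, flush_size, flush_stride)


def manual_flush(memory, flush_size, flush_stride):
    """Sequentially read one byte per stride and return the read count."""
    reads = 0
    for offset in flush_read_offsets(flush_size, flush_stride):
        memory[offset]  # the read itself is the point; the value is unused
        reads += 1
    return reads


# Hypothetical platform: 512 KB largest cache, 64-byte cache lines.
flush_size = 2 * 512 * 1024
print(manual_flush(bytes(flush_size), flush_size, 64))
```

Reading one byte per smallest cache line across twice the largest cache size is what makes the typical FLUSH_SIZE and FLUSH_STRIDE values sufficient to displace every line.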

8.3. Power, Performance, and Throttling State Dependencies

Cost and complexity trade-offs have led platform designs to introduce control dependencies between logical processors when entering power, performance, and throttling states. These dependencies exist in various forms in multi-processor, multi-threaded processor, and multi-core processor-based platforms. These dependencies may also be hierarchical. For example, a multi-processor system consisting of processors containing multiple cores containing multiple threads may have various dependencies as a result of the hardware implementation.

Unless OSPM is aware of the dependency between the logical processors, it might lead to scenarios where one logical processor is implicitly transitioned to a power, performance, or throttling state when it is unwarranted, leading to incorrect / non-optimal system behavior. Given knowledge of the dependencies, OSPM can coordinate the transitions between logical processors, choosing to initiate the transition when doing so does not lead to incorrect or non-optimal system behavior. This OSPM coordination is referred to as Software (SW) Coordination. Alternately, it might be possible for the underlying hardware to coordinate the state transition requests on multiple logical processors, causing the processors to transition to the target state when the transition is guaranteed to not lead to incorrect or non-optimal system behavior. This scenario is referred to as Hardware (HW) coordination. When hardware coordinates transitions, OSPM continues to initiate state transitions as it would if there were no dependencies. However, in this case it is required that hardware provide OSPM with a means to determine actual state residency so that correct / optimal control policy can be realized.

Platforms containing logical processors with cross-processor dependencies in the power, performance, or throttling state control areas use ACPI defined interfaces to group logical processors into what is referred to as a dependency domain. The Coordination Type characteristic for a domain specifies whether OSPM or underlying hardware is responsible for the coordination. When OSPM coordinates, the platform may require that OSPM transition ALL (0xFC) or ANY ONE (0xFD) of the processors belonging to the domain into a particular target state. OSPM may choose at its discretion to perform coordination even though the underlying hardware supports hardware coordination. In this case, OSPM must transition all logical processors in the dependency domain to the particular target state.

| Value | Description |
|---|---|
| 0xFC | SW_ALL: OSPM coordinates the state for all processors in the domain by making the same state request on the control interface of each processor in the domain. ALL refers to the requirement that all processors in the domain must agree on the requested state for the domain to enter that state. |
| 0xFD | SW_ANY: OSPM coordinates the state for all processors in the domain by making a state request on the control interface of only one processor in the domain. ANY refers to the hardware requirement for all processors in the domain to transition to the last requested state on any processor in the domain. |
| 0xFE | HW_ALL: As OSPM requests a state transition on the control interface of any processor in the domain, hardware coordinates the state for all processors in the domain and transitions all processors in the domain to the coordinated state. ALL refers to the requirement for hardware maintaining coordination as OSPM makes independent state requests on any processor in the domain. Unlike SW_ALL, OSPM can make different state requests for processors in the domain, while hardware determines the resulting state for all processors in the domain. Note: The hardware coordination policy is implementation-defined. |

There are no dependencies implied between a processor’s C-states, P-states, or T-states. Hence, for example, it is possible to use the same dependency domain number for specifying dependencies between P-states among one set of processors and C-states among another set of processors without any dependencies being implied between the P-state transitions on a processor in the first set and C-state transitions on a processor in the second set.
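How the coordination type changes what OSPM does for a single state request can be sketched as follows. The function name and the list-of-names representation of a domain are hypothetical; only the three coordination type values come from the table above.

```python
SW_ALL = 0xFC
SW_ANY = 0xFD
HW_ALL = 0xFE


def control_interfaces_to_write(coord_type, domain, requester):
    """Processors on whose control interface OSPM issues the request.

    domain is the list of processors in the dependency domain and
    requester is the processor for which OSPM wants the new state.
    """
    if requester not in domain:
        raise ValueError("requester is not a member of the domain")
    if coord_type == SW_ALL:
        # OSPM makes the same request on every processor in the domain.
        return list(domain)
    if coord_type == SW_ANY:
        # A request on any one processor moves the whole domain.
        return [requester]
    if coord_type == HW_ALL:
        # OSPM requests independently; hardware resolves the final state.
        return [requester]
    raise ValueError("unknown coordination type: %#x" % coord_type)


domain = ["CPU0", "CPU1"]
print(control_interfaces_to_write(SW_ALL, domain, "CPU1"))
print(control_interfaces_to_write(SW_ANY, domain, "CPU1"))
```

SW_ANY and HW_ALL produce the same write list here; the difference is in who decides the resulting state. Under SW_ANY the last request wins for the whole domain, while under HW_ALL the hardware applies its own implementation-defined policy.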

8.4. Declaring Processors

Each processor in the system must be declared in the ACPI namespace in the \_SB scope. A Device definition for a processor is declared using the ACPI0007 hardware identifier (HID). Processor configuration information is provided exclusively by objects in the processor device’s object list.

When the platform uses the APIC interrupt model, _UID object values under a processor device are used to associate processor devices with entries in the MADT.

Processor-specific objects may be declared within the processor device’s scope. These objects serve multiple purposes including processor performance state control. Other ACPI-defined device-related objects are also allowed under the processor device’s scope (for example, the unique identifier object _UID mentioned above).

With device-like characteristics attributed to processors, it is implied that a processor device driver will be loaded by OSPM to, at a minimum, process device notifications. OSPM will enumerate processors in the system using the ACPI Namespace, processor-specific native identification instructions, and the _HID method.

For more information on the declaration of the processor device object, see Device (Declare Device Package). Processor-specific child objects are described in the following sections.

ACPI 6.0 introduces the notion of processor containers. Processor containers are declared using the Processor Container Device. A processor container can be used to describe a collection of associated processors that share common resources, such as shared caches, and which have power states that affect the processors in the collection. For more information see Processor Container Device.

8.4.1. Processor Power State Control

ACPI defines multiple processor power state (C state) control interfaces. These are:

The Processor Register Block’s (P_BLK’s) P_LVL2 and P_LVL3 registers coupled with FADT P_LVLx_LAT values.

The _CST object in the processor’s object list.

The _LPI objects for processors and processor containers.

P_BLK based C state controls are described in ACPI Hardware Specification. _CST based C state controls expand the functionality of the P_BLK based controls allowing the number and type of C states to be dynamic and accommodate CPU architecture specific C state entry and exit mechanisms as indicated by registers defined using the Functional Fixed Hardware address space.

ACPI 6.0 introduces _LPI, the low power idle state object. _LPI provides more detailed power state information and can describe idle states at multiple levels of hierarchy in conjunction with Processor Containers. See _LPI (Low Power Idle States) for details.

8.4.1.1. _CST (C States)

_CST is an optional object that provides an alternative method to declare the supported processor power states (C States). Values provided by the _CST object override P_LVLx values in P_BLK and P_LVLx_LAT values in the FADT. The _CST object allows the number of processor power states to be expanded beyond C1, C2, and C3 to an arbitrary number of power states. The entry semantics for these expanded states (in other words, the considerations for entering these states) are conveyed to OSPM by the C state Type field and correspond to the entry semantics for C1, C2, and C3 as described in Section 8.1.2 through Section 8.1.4. _CST defines ascending C-states characterized by lower power and higher entry/exit latency.

Arguments:

None

Return Value:

A variable-length Package containing a list of C-state information Packages as described below

Return Value Information

_CST returns a variable-length Package that contains the following elements:

Count: An Integer that contains the number of CState sub-packages that follow

CStates[]: A list of Count CState sub-packages

    Package {
        Count               // Integer
        CStates[0]          // Package
        ...
        CStates[Count-1]    // Package
    }

Each fixed-length CState sub-Package contains the elements described below:

    Package {
        Register    // Buffer (Resource Descriptor)
        Type        // Integer (BYTE)
        Latency     // Integer (WORD)
        Power       // Integer (DWORD)
    }

| Element | Object Type | Description |
|---|---|---|
| Register | Buffer | Contains a Resource Descriptor with a single Register() descriptor that describes the register that OSPM must read to place the processor in the corresponding C state. |
| Type | Integer (BYTE) | The C State type (1=C1, 2=C2, 3=C3). This field conveys the semantics to be used by OSPM when entering/exiting the C state. Zero is not a valid value. |
| Latency | Integer (WORD) | The worst-case latency to enter and exit the C State (in microseconds). There are no latency restrictions. |
| Power | Integer (DWORD) | The average power consumption of the processor when in the corresponding C State (in milliwatts). |

The platform must expose a _CST object for either all or none of its processors. If the _CST object exists, OSPM uses the C state information specified in the _CST object in lieu of P_LVL2 and P_LVL3 registers defined in P_BLK and the P_LVLx_LAT values defined in the FADT. Also notice that if the _CST object exists and the _PTC object does not exist, OSPM will use the Processor Control Register defined in P_BLK and the C_State_Register registers in the _CST object.

The platform may change the number or type of C States available for OSPM use dynamically by issuing a Notify event on the processor object with a notification value of 0x81. This will cause OSPM to re-evaluate any _CST object residing under the processor object notified. For example, the platform might notify OSPM that the number of supported C States has changed as a result of an asynchronous AC insertion / removal event.

The platform must specify unique C_State_Register addresses for all entries within a given _CST object.

_CST eliminates the ACPI 1.0 restriction that all processors must have C State parity. With _CST, each processor can have its own characteristics independent of other processors. For example, processor 0 can support C1, C2 and C3, while processor 1 supports only C1.

The fields in the processor structure remain for backward compatibility.

Example

    Processor (
        \_SB.CPU0,    // Processor Name
        1,            // ACPI Processor number
        0x120,        // PBlk system IO address
        6             // PBlkLen
    )
    {
        Name(_CST, Package()
        {
            4,    // There are four C-states defined here with three semantics
                  // The third and fourth C-states defined have the same C3 entry semantics
            Package(){ResourceTemplate(){Register(FFixedHW, 0, 0, 0)}, 1, 20, 1000},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x161)}, 2, 40, 750},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x162)}, 3, 60, 500},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x163)}, 3, 100, 250}
        })
    }

Notice in the example above that OSPM should anticipate the possibility of a _CST object providing more than one entry with the same C_State_Type value. In this case OSPM must decide which C_State_Register it will use to enter that C state.

Example

This is an example usage of the _CST object using the typical values as defined in ACPI 1.0.

    Processor (
        \_SB.CPU0,    // Processor Name
        1,            // ACPI Processor number
        0x120,        // PBLK system IO address
        6 )           // PBLK Len
    {
        Name(_CST, Package()
        {
            2,    // There are two C-states defined here - C2 and C3
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x124)}, 2, 2, 750},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x125)}, 3, 65, 500}
        })
    }

The platform will issue a Notify (\_SB.CPU0, 0x81) to inform OSPM to re-evaluate this object when the number of available processor power states changes.
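As an illustration of how OSPM might consume an evaluated _CST package, the sketch below validates the package and picks an entry for a given latency budget. The Python representation (a list whose first element is Count, followed by tuples) and all function names are hypothetical; register descriptors are reduced to strings. The selection rule (lowest power among entries whose worst-case latency fits the budget) is one possible policy, not the one mandated by the text.

```python
from typing import NamedTuple


class CState(NamedTuple):
    register: str    # stand-in for the Register() resource descriptor
    ctype: int       # 1=C1, 2=C2, 3=C3 entry semantics
    latency_us: int  # worst-case entry/exit latency
    power_mw: int    # average power in the state


def parse_cst(package):
    """Validate a [Count, CState, ...] list and return CState tuples."""
    count, *entries = package
    if count != len(entries):
        raise ValueError("Count does not match the number of sub-packages")
    states = [CState(*entry) for entry in entries]
    if any(s.ctype == 0 for s in states):
        raise ValueError("a Type of zero is not valid")
    if len({s.register for s in states}) != len(states):
        raise ValueError("C_State_Register addresses must be unique")
    return states


def pick_state(states, latency_budget_us):
    """Lowest-power entry whose latency fits the budget, or None."""
    eligible = [s for s in states if s.latency_us <= latency_budget_us]
    return min(eligible, key=lambda s: s.power_mw) if eligible else None


# Values taken from the four-entry _CST example above.
cst = parse_cst([
    4,
    ("FFixedHW", 1, 20, 1000),
    ("SystemIO:0x161", 2, 40, 750),
    ("SystemIO:0x162", 3, 60, 500),
    ("SystemIO:0x163", 3, 100, 250),
])
print(pick_state(cst, 80))
```

Selecting by power and latency rather than by Type also resolves the case where two entries share the same C_State_Type, since each entry is judged on its own figures.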

8.4.1.2. _CSD (C-State Dependency)

This optional object provides C-state control cross logical processor dependency information to OSPM. The _CSD object evaluates to a packaged list of information that correlates with the C-state information returned by the _CST object. Each packaged list entry identifies the C-state for which the dependency is being specified (as an index into the _CST object list), a dependency domain number for that C-state, the coordination type for that C-state and the number of logical processors belonging to the domain for the particular C-state. It is possible that a particular C-state may belong to multiple domains. That is, it is possible to have multiple entries in the _CSD list with the same CStateIndex value.

Arguments:

None

Return Value:

A variable-length Package containing a list of C-state dependency Packages as described below.

Return Value Information

    Package {
        CStateDependency[0]    // Package
        ...
        CStateDependency[n]    // Package
    }

Each CStateDependency sub-Package contains the elements described below:

    Package {
        NumEntries       // Integer
        Revision         // Integer (BYTE)
        Domain           // Integer (DWORD)
        CoordType        // Integer (DWORD)
        NumProcessors    // Integer (DWORD)
        Index            // Integer (DWORD)
    }

| Element | Object Type | Description |
|---|---|---|
| NumEntries | Integer | The number of entries in the CStateDependency package including this field. Current value is 6. |
| Revision | Integer (BYTE) | The revision number of the CStateDependency package format. Current value is 0. |
| Domain | Integer (DWORD) | The dependency domain number to which this C state entry belongs. |
| CoordType | Integer (DWORD) | See Table 8.1 for supported C-state coordination types. |
| NumProcessors | Integer (DWORD) | The number of processors belonging to the domain for the particular C-state. OSPM will not start performing power state transitions to a particular C-state until this number of processors belonging to the same domain for the particular C-state have been detected and started. |
| Index | Integer (DWORD) | Indicates the index of the C-State entry in the _CST object for which the dependency applies. |

Given that the number or type of available C States may change dynamically, ACPI supports Notify events on the processor object, with Notify events of type 0x81 causing OSPM to re-evaluate any _CST objects residing under the particular processor object notified. On receipt of Notify events of type 0x81, OSPM should re-evaluate any present _CSD objects also.

Example

This is an example usage of the _CSD structure in a Processor structure in the namespace. The example represents a two-processor configuration. The C1-type state can be independently entered on each processor. For the C2-type state, there exists a dependence between the two processors, such that one processor transitioning to the C2-type state causes the other processor to transition to the C2-type state. A similar dependence exists for the C3-type state. OSPM will be required to coordinate the C2 and C3 transitions between the two processors. Also, OSPM can initiate a transition on either processor to cause both to transition to the common target C-state.

    Processor (
        \_SB.CPU0,    // Processor Name
        1,            // ACPI Processor number
        0x120,        // PBlk system IO address
        6 )           // PBlkLen
    {
        Name (_CST, Package()
        {
            3,    // There are three C-states defined here with three semantics
            Package(){ResourceTemplate(){Register(FFixedHW, 0, 0, 0)}, 1, 20, 1000},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x161)}, 2, 40, 750},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x162)}, 3, 60, 500}
        })
        Name(_CSD, Package()
        {
            Package(){6, 0, 0, 0xFD, 2, 1},    // 6 entries, Revision 0, Domain 0, OSPM Coordinate
                                               // Initiate on Any Proc, 2 Procs, Index 1 (C2-type)
            Package(){6, 0, 0, 0xFD, 2, 2}     // 6 entries, Revision 0, Domain 0, OSPM Coordinate
                                               // Initiate on Any Proc, 2 Procs, Index 2 (C3-type)
        })
    }

    Processor (
        \_SB.CPU1,    // Processor Name
        2,            // ACPI Processor number
        ,             // PBlk system IO address
        )             // PBlkLen
    {
        Name(_CST, Package()
        {
            3,    // There are three C-states defined here with three semantics
            Package(){ResourceTemplate(){Register(FFixedHW, 0, 0, 0)}, 1, 20, 1000},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x161)}, 2, 40, 750},
            Package(){ResourceTemplate(){Register(SystemIO, 8, 0, 0x162)}, 3, 60, 500}
        })
        Name(_CSD, Package()
        {
            Package(){6, 0, 0, 0xFD, 2, 1},    // 6 entries, Revision 0, Domain 0, OSPM Coordinate
                                               // Initiate on Any Proc, 2 Procs, Index 1 (C2-type)
            Package(){6, 0, 0, 0xFD, 2, 2}     // 6 entries, Revision 0, Domain 0, OSPM Coordinate
                                               // Initiate on Any Proc, 2 Procs, Index 2 (C3-type)
        })
    }

When the number of available processor power states changes, the platform issues a Notify (\_SB.CPU0, 0x81) to inform OSPM to re-evaluate _CST. OSPM should also re-evaluate _CSD at that time.
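The following Python sketch illustrates how an OS-side consumer might interpret the _CSD packages from the example above. It is a minimal model, not OSPM code: the names `CsdEntry`, `parse_csd`, `dependency_groups`, and `can_coordinate` are hypothetical, and the coordination-type names other than the 0xFD value used in the example are assumed from the usual ACPI encoding rather than taken from this section.

```python
from collections import defaultdict
from typing import NamedTuple

# 0xFD is the coordination type used in the example above. The names and the
# other two values are assumptions (Table 8.1 is not reproduced here).
COORD_TYPES = {0xFC: "SW_ALL", 0xFD: "SW_ANY", 0xFE: "HW_ALL"}


class CsdEntry(NamedTuple):
    num_entries: int
    revision: int
    domain: int
    coord_type: int
    num_processors: int
    index: int


def parse_csd(package):
    """Convert each _CSD sub-package (a 6-item sequence) into a CsdEntry."""
    entries = []
    for raw in package:
        if len(raw) != 6 or raw[0] != 6:
            raise ValueError(f"malformed _CSD entry: {raw!r}")
        entry = CsdEntry(*raw)
        if entry.revision != 0:
            raise ValueError(f"unsupported _CSD revision: {entry.revision}")
        if entry.coord_type not in COORD_TYPES:
            raise ValueError(f"unknown CoordType: {entry.coord_type:#x}")
        entries.append(entry)
    return entries


def dependency_groups(csd_by_cpu):
    """Map (domain, C-state index) to the started CPUs that reported it."""
    groups = defaultdict(list)
    for cpu, package in csd_by_cpu.items():
        for entry in parse_csd(package):
            groups[(entry.domain, entry.index)].append((cpu, entry))
    return groups


def can_coordinate(groups, domain, index):
    """True once NumProcessors CPUs of the domain have been started."""
    members = groups.get((domain, index), [])
    return bool(members) and len(members) >= members[0][1].num_processors


# Values taken from the _CSD packages in the example above.
CSD = [(6, 0, 0, 0xFD, 2, 1), (6, 0, 0, 0xFD, 2, 2)]

only_cpu0 = dependency_groups({"CPU0": CSD})
both_cpus = dependency_groups({"CPU0": CSD, "CPU1": CSD})
print(can_coordinate(only_cpu0, 0, 1))  # C2-type entry, CPU1 not started yet
print(can_coordinate(both_cpus, 0, 1))  # C2-type entry, both CPUs started
```

On a Notify of type 0x81, a consumer built along these lines would discard its cached groups and rebuild them from freshly evaluated _CST and _CSD objects.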

8.4.2. Processor Hierarchy

It is very typical for computing platforms to have a multitude of processors that share common resources, such as caches, and which have common power states that affect groups of processors. These are arranged in a hierarchical manner. For example, a system may contain a set of NUMA nodes, each with a number of sockets, which may contain multiple groups of processors, each of which may contain individual processor cores, each of which may contain multiple hardware threads. Different architectures use different terminology to denominate logically associated processors, but terms such as package, cluster, module, and socket are typical examples. ACPI uses the term processor container to describe a group of associated processors. Processors are said to belong to a container if they are associated in some way, such as a shared cache or a low power mode which affects them all.

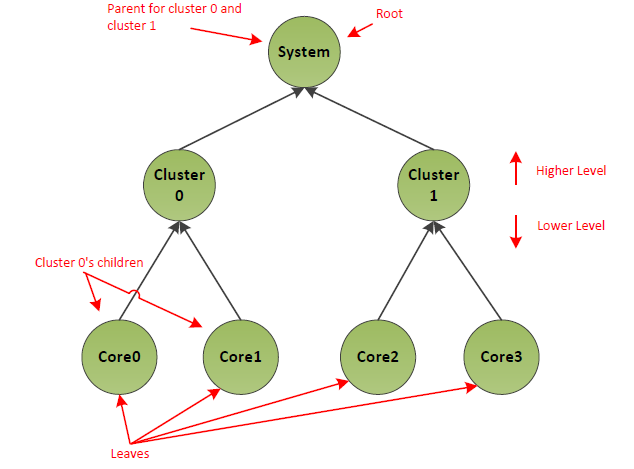

Fig. 8.6 Processor Hierarchy

The figure above depicts an example system, which comprises a system level processor container, which in turn contains two cluster processor containers, each of which contains two processors. The overall collection is called the processor hierarchy and standard tree terminology is used to refer to different parts of it. For example, an individual processor or container is called a node, the nodes which reside within a processor container are called children of that parent, etc. This example is symmetric but that is not a requirement. For example, a system may contain a different number of processors in different containers or an asymmetric hierarchy where one side of the topology tree is deeper than another. Also note that while this example includes a single top level processor container encompassing all processors, this is not a requirement. It is legal for a system to be described using a collection of trees. (See Note below)

Note

The processor hierarchy can be used to describe a number of different characteristics of system topology. The main example is shared power states; see Lower Power Idle States for details.
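As an illustration of the tree terminology, the sketch below models the example hierarchy (one system level container, two cluster containers, two processors each) in Python. The `Node` class and the node names are hypothetical and exist only for this example.

```python
class Node:
    """A processor or processor container in the processor hierarchy."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.children = []
        if parent is not None:
            parent.children.append(self)

    def ancestors(self):
        """Yield the containers above this node, nearest first."""
        node = self.parent
        while node is not None:
            yield node
            node = node.parent

    def processors(self):
        """Yield the leaf nodes (processors) at or below this node."""
        if not self.children:
            yield self
        for child in self.children:
            yield from child.processors()


# Hypothetical names for the nodes of the example system.
system = Node("System")
cluster0 = Node("Cluster0", system)
cluster1 = Node("Cluster1", system)
cpus = [Node("CPU0", cluster0), Node("CPU1", cluster0),
        Node("CPU2", cluster1), Node("CPU3", cluster1)]

print([n.name for n in cpus[0].ancestors()])
print([n.name for n in cluster1.processors()])
```

A system described as a collection of trees would simply have several root nodes whose `parent` is `None`.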

8.4.2.1. Processor Container Device

This optional device is a container object that acts much like a bus node in a namespace. It may contain child objects that are either processor devices or other processor containers. This allows representing hierarchical processor topologies. Each processor container or processor in the hierarchy is herein referred to as a node. The processor container device is declared using the hardware identifier (_HID) ACPI0010.

To aid support of operating systems which do not parse processor containers, a container can carry a Compatible ID (_CID) of PNP0A05, which represents a generic container device (see Device Class-Specific Objects).

A processor container declaration must supply a _UID method returning an ID that is unique in the processor container hierarchy. A processor container must contain either other processor containers or other processor devices declared within its scope. In addition, a processor container may also contain the following methods in its scope:

| Object | Description |
|---|---|
| _LPI | Declares local power states for the hierarchy node represented by the processor container |
| _RDI | Declares power resource dependencies that affect system level power states |
| _STA | Determines the status of a processor container. See Device Class-Specific Objects. |

_LPI may also be present under a processor device, and is described in _LPI (Low Power Idle States). _RDI can only be present under a singular top level processor container object, and is described below.

ACPI allows the definition of more than one root level processor container. In other words, it is possible to define multiple top level containers. For example, in a NUMA system if there are no idle states or other objects that need to be encapsulated at the system level, multiple NUMA-node level processor containers may be defined at the top level of the hierarchy.

Processor Container Device objects are only valid for implementations conforming to ACPI 6.0 or higher. A platform can ascertain whether an operating system supports parsing of processor container objects via the _OSC method (see Platform-Wide OSPM Capabilities).

8.4.3. Lower Power Idle States

ACPI 6.0 introduces Lower Power Idle states (LPI). This extends the specification to allow expression of idle states that, like C-states, are selected by the OSPM when a processor goes idle, but which may affect more than one processor, and may affect other system components. LPI extensions in the specification leverage the processor container device, and in this way can express which parts of the system are affected by a given LPI state.

LPI states are defined via the following objects:

_LPI objects define the states themselves, and may be declared inside a processor or a processor container device

_RDI allows expressing constraints on LPI usage borne out of device usage

8.4.3.1. Hierarchical Idle States

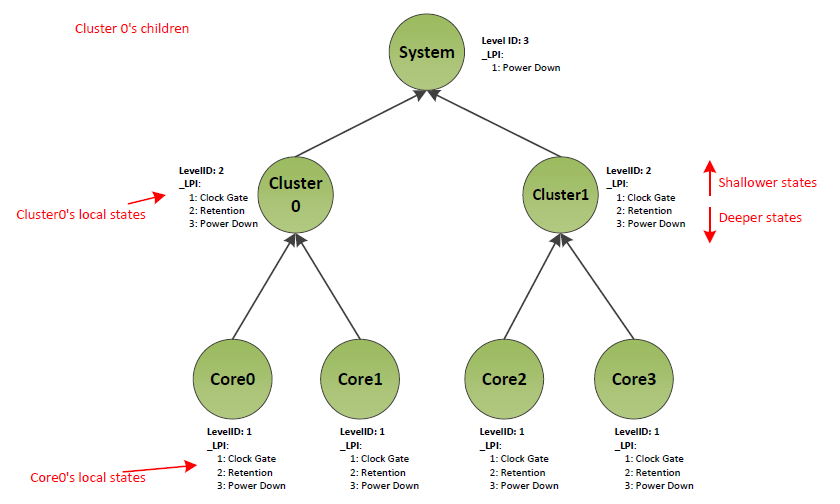

Processor containers (Processor Container Device) can be used in conjunction with _LPI (_LPI (Low Power Idle States)) to describe idle states in a hierarchical manner. Within the processor hierarchy, each node has low power states that are specific to that node. ACPI refers to states that are specific to a node in the hierarchy as Local Power States. For example in the system depicted in Power states for processor hierarchy, the local power states of CPU0 are clock gate, retention and power down.

When the OS running on a given processor detects there is no more work to schedule on that processor, it needs to select an idle state. The state may affect more than just that processor. A processor going idle could be the last one in the system, or in a processor container, and therefore may select a power state that affects multiple processors. In order to select such a state, the OS needs to choose a local power state for each affected level in the processor hierarchy.

Fig. 8.7 Power states for processor hierarchy

Consider a situation where Core0 is the last active core in the example system depicted in Power states for processor hierarchy. It may put the system into the lowest possible idle state. To do so, the OS chooses local state 3 (Power Down) for Core0, local state 3 (Power Down) for Cluster0, and local state 1 (Power Down) for the system. However, most HW architectures only support a single power state request from the OS to the platform. That is, it is not possible to make a separate local power state request per hierarchy node to the platform. Therefore, the OS must combine the per level local power states into a single Composite power state. The platform then acts on the Composite power state request.

A platform can only support a limited set of Composite power states, and not every combination of Local Power states across levels is valid. The valid power states in our example system are depicted in the following table.

| System Level Processor Container | Cluster level Processor Container | Processor |
|---|---|---|
| Running | Running | Clock Gated |
| Running | Running | Retention |
| Running | Running | Power Down |
| Running | Clock Gated | Clock Gated |
| Running | Clock Gated | Retention |
| Running | Clock Gated | Power Down |
| Running | Retention | Retention |
| Running | Retention | Power Down |
| Running | Power Down | Power Down |
| Power Down | Power Down | Power Down |

8.4.3.2. Idle State Coordination

With hierarchical idle states, multiple processors affect the idle state for any non-leaf hierarchy node. Taking our example system in Power states for processor hierarchy, for cluster 0 to enter a low power state, both Core 0 and Core 1 must be idle. In addition, the power state selection done for Core 0 and Core 1 as they go idle has bearing on the state that can be used for Cluster 0. This requires coordination of idle state requests between the two processors. ACPI supports two different coordination schemes (detailed in subsections following):

Platform coordinated

OS Initiated

The OS and the platform can handshake on support for OS Initiated Idle or Platform Coordinated Idle using the _OSC method as described in Platform-Wide OSPM Capabilities. Note that an Architecture specific command may be required to enter OS Initiated mode, in which case please refer to architecture specific documentation. (For PSCI documentation see http://uefi.org/acpi under the heading “PSCI Specification”; for ARM FFH documentation, see http://uefi.org/acpi under the heading “ARM FFH Specification”.)

8.4.3.2.1. Platform Coordinated

With the Platform Coordinated scheme, the platform is responsible for coordination of idle states across processors. OSPM makes a request for all levels of the hierarchy from each processor, meaning that each processor votes by requesting a local power state for itself, its parent, its parent’s parent, etc. (In some cases, the vote for a particular hierarchy level may be implicit - see the autopromotion discussion below for more details.) When choosing idle states at higher levels, the OSPM on a processor may opt to keep a higher level node in a running state - this is still a vote for that node which the platform must respect. The vote expressed by the OSPM sets out the constraints on the local power state that the platform may choose for the processor and for any parent nodes affected by the vote. In particular, the vote expresses that the platform must not enter:

A deeper (lower power) local state than the requested one.

A local power state with a higher wake up latency than the requested one.

A local power state with power resource dependencies that the requested state does not have.

The platform looks across the votes for each hierarchy node from all underlying cores and chooses the deepest local state which satisfies all of the constraints associated with all of the votes. Normally, this just means taking the shallowest state that one of the cores voted for, since shallower states have lower wakeup latencies, lower minimum residencies, and fewer power resource dependencies. However, this may not always be true, as state depth and latencies do not always increase together. For the sake of efficiency, the platform should generally not enter a power state with a higher minimum residency than the requested one. However, this is not a strict functional requirement. The platform may resolve to a state with higher minimum residency if it believes that is the most efficient choice based on the specific states and circumstances.

Using the above example in Power states for processor hierarchy, a simple flow would look like this:

Core0 goes idle - OS requests Core0 Power Down, Cluster0 Retention

Platform receives Core0 request - places Core0 in the Power Down state

Core1 goes idle - OS requests Core1 Power Down, Cluster0 Power Down

Platform receives Core1 request - puts Core1 in the Power Down state, and takes shallowest vote for Cluster0, thus placing it into the Retention state

If the OSPM wanted to request power states beyond the cluster level, then Core0 and Core1 would both vote for an idle state at System level too, and the platform would resolve the final state selection across their votes and votes from any other processors under the System hierarchy via the method described above.
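The vote resolution described above can be sketched in Python as follows. This is an illustrative model only: `LocalState`, `resolve`, and the latency figures are hypothetical, and the running state is assumed to have zero wakeup latency and no power resource dependencies so that it always satisfies every vote.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LocalState:
    name: str
    wakeup_latency_us: int
    power_deps: frozenset = frozenset()


def resolve(states, votes):
    """Return the index of the deepest state that satisfies every vote.

    `states` is ordered from shallowest (index 0, running) to deepest.
    `votes` holds one requested state index per underlying core; a core that
    is still running counts as a vote for index 0.
    """
    def satisfies(candidate, vote):
        chosen, requested = states[candidate], states[vote]
        return (candidate <= vote  # not deeper than requested
                and chosen.wakeup_latency_us <= requested.wakeup_latency_us
                and chosen.power_deps <= requested.power_deps)

    allowed = [i for i in range(len(states))
               if all(satisfies(i, v) for v in votes)]
    return max(allowed)


# Cluster0 local states; the latency values are made up for illustration.
CLUSTER = [
    LocalState("Running", 0),
    LocalState("Clock Gated", 10),
    LocalState("Retention", 100),
    LocalState("Power Down", 1000),
]

# Core0 voted Retention while Core1 is still running (an implicit vote for 0).
after_core0 = resolve(CLUSTER, [2, 0])
# Core1 then goes idle and votes Power Down for the cluster.
after_core1 = resolve(CLUSTER, [2, 3])
print(CLUSTER[after_core0].name, CLUSTER[after_core1].name)
```

Because each constraint is checked separately, the result can be shallower than the shallowest vote when wakeup latency does not grow with state depth.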

As mentioned above, certain platforms support a mechanism called autopromotion where the votes for higher level states may be implicit rather than explicit. In this scheme, the platform provides OSPM with commands to request idle states at a lower level of the processor hierarchy which automatically imply a specific idle state request at the respective higher level of the hierarchy. There is no command to explicitly request entry into the higher level state, only the implicit request based on the lower level state.

For example, if the platform illustrated in Power states for processor hierarchy uses autopromotion for the Cluster0 Clock Gated state, neither Core0 nor Core1 can explicitly request it. However, a core level Clock Gate request from either Core0 or Core1 would imply a Cluster0 Clock Gate request. Therefore, if both cores request core clock gating (or deeper), Cluster0 will be clock gated automatically by the platform. Additional details on how autopromotion is supported by ACPI can be found in Entry Method and Composition.

8.4.3.2.2. OS Initiated

In the OS Initiated coordination scheme, OSPM only requests an idle state for a particular hierarchy node when the last underlying processor goes to sleep. Obviously a processor always selects an idle state for itself, but idle states for higher level hierarchy nodes like clusters are only selected when the last processor in the cluster goes idle. The platform only considers the most recent request for a particular node when deciding on its idle state.

The main motivations for OS Initiated coordination are:

Avoid overhead of OSPM evaluating selection for higher level idle states which will not be used since other processors are still awake

Allow OSPM to make higher level idle state selections based on the latest information by taking only the most recent request for a particular node and ignoring requests from processors which went to sleep in the past (and may have been based on information which is now stale)

Using the above example, a simple flow would look like the following.

| Step | Description | OS View of power states | Platform view of power states |
|---|---|---|---|
| 0 | Cores 0 and 1 are both awake and running code | Core0: Running, Core1: Running, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 1 | OS on Core0 requests Core0 PowerDown | Core0: PowerDown, Core1: Running, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 2 | Platform observes request and places Core0 into power down | Core0: PowerDown, Core1: Running, Cluster0: Running | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 3 | OS on Core1 requests Core1 PowerDown and Cluster0 PowerDown | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 4 | Platform observes requests for Core1 and Cluster0 and processes them | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown |

Note that Core1 is making a cluster decision which affects both Core0 and Core1 so OSPM should consider expected sleep duration, wake up latency requirements, device dependencies, etc. for both cores and not just Core1 when requesting the cluster state.

The platform is still responsible for ensuring functional correctness. For example, if Core0 wakes back up, the cluster state requested by Core1 in the above example should be exited or the entry into the state should be aborted. OSPM has no responsibility to guarantee that the last core down is also the first core up, or that a core does not wake up just as another is requesting a higher level sleep state.

8.4.3.2.2.1. OS Initiated Request Semantics

With OS Initiated coordination, the ordering of requests from different cores is critically important since the platform acts upon the latest one. If the platform does not process requests in the order the OS intended then it may put the platform into the wrong state. Consider this scenario in our example system in Power states for processor hierarchy, as shown in the following table.

| Step | Description | OS View of power states | Platform view of power states |
|---|---|---|---|
| 0 | Core0 in PowerDown, and Core1 is running | Core0: PowerDown, Core1: Running, Cluster0: Running | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 1 | Core1 goes idle - OSPM requests Core1 PowerDown and Cluster0 Retention | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 2 | Core0 receives an interrupt and wakes up into platform | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention | Core0: Running, Core1: Running, Cluster0: Running |
| 3 | Core0 moves into OSPM and starts processing interrupt | Core0: Running, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 4 | Core0 goes idle and OSPM requests Core0 Power Down, Cluster0 Power Down | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: Running, Core1: Running, Cluster0: Running |
| 5 | Core0’s idle request “passes” Core1’s request. Platform puts Core0 to Power Down but ignores cluster request since Core1 is still running | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 6 | Core1’s request is observed by the platform. Platform puts Core1 to Power Down and Cluster0 to retention. | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown!! (See Note below) | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention!! (See Note below) |

Note

In the last row of the table above, the Cluster0 values are mismatched.

The key issue here is the race condition between the requests from the two cores; there is no guarantee that they reach the platform in the same order the OS made them. It is not expected to be common, but Core0’s request could “pass” Core1’s for a variety of potential reasons - lower frequency, different cache behavior, handling of some non-OS visible event, etc. This sequence of events results in the platform incorrectly acting on the stale Cluster0 request from Core1 rather than the latest request from Core0. The net result is that Cluster0 is left in the wrong state until the next wakeup.

To address such race conditions and ensure that the platform and OS have a consistent view of the request ordering, OS Initiated idle state request semantics are enhanced to include a hierarchical dependency check. When the platform receives a request, it is responsible for checking whether the requesting core is really the last core down in the requested domain and rejecting the request if not. Note that even if OSPM and the platform are behaving correctly, they may not always agree on the state of the system due to various races. For example, the platform may see a core waking up before OSPM, and therefore see that core as running, whilst the OSPM still sees it as sleeping. The platform can start treating a particular core as being in a low power state, for the sake of the dependency check, once it has seen the core’s request (so that it can be correctly ordered versus other OS requests). The platform must start treating a core as running before returning control to the OS after it wakes up from an idle state.

With this dependency check, the above example would change as follows:

| Step | Description | OS View of power states | Platform view of power states |
|---|---|---|---|
| 0-4 | Same as above | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: Running, Core1: Running, Cluster0: Running |
| 5 | Core0’s idle request “passes” Core1’s request. Platform rejects Core0’s request since it includes Cluster0 but Core1 is still awake. | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: Running, Core1: Running, Cluster0: Running |
| 6 | Core1’s request is observed by the platform. Platform rejects Core1’s request since it includes Cluster0 but Core0 is still awake. | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: Running, Core1: Running, Cluster0: Running |
| 7 | OS resumes on Core0 | Core0: Running, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 8 | OS resumes on Core1 | Core0: Running, Core1: Running, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |

Once control is returned to the OS, it can handle the rejection as it sees fit - likely just re-evaluating the idle state on both cores. When requests are received out of order, some overhead is introduced by rejecting the command and forcing the OS to re-evaluate, but this is expected to be rare. Requests sent by the OS should be seen by the platform in the same order the vast majority of the time, and in this case, the idle command will proceed as normal.
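The dependency check can be illustrated with a small Python model of a single cluster. This is a sketch under simplifying assumptions, not a platform implementation: the `Platform` class and its methods are hypothetical, and a request is treated as "including Cluster0" whenever a cluster state is supplied.

```python
class Platform:
    """Toy platform model for a single cluster (names are hypothetical)."""

    def __init__(self, cores):
        self.core_state = {core: "Running" for core in cores}
        self.cluster_state = "Running"

    def idle_request(self, core, core_state, cluster_state=None):
        """Apply an OS Initiated request; return False if it is rejected."""
        others_running = any(state == "Running"
                             for name, state in self.core_state.items()
                             if name != core)
        if cluster_state is not None and others_running:
            return False  # requester is not the last core down in the cluster
        self.core_state[core] = core_state
        if cluster_state is not None:
            self.cluster_state = cluster_state
        return True

    def wake(self, core):
        """A waking core is treated as running, and so is its cluster."""
        self.core_state[core] = "Running"
        self.cluster_state = "Running"


# In-order flow: Core0 goes down first, then Core1 as the last core down.
in_order = Platform(["Core0", "Core1"])
in_order_results = [
    in_order.idle_request("Core0", "PowerDown"),
    in_order.idle_request("Core1", "PowerDown", "PowerDown"),
]

# Race: both cores are running in the platform view and Core0's request
# "passes" Core1's older request.
raced = Platform(["Core0", "Core1"])
raced_results = [
    raced.idle_request("Core0", "PowerDown", "PowerDown"),
    raced.idle_request("Core1", "PowerDown", "Retention"),
]
print(in_order_results, in_order.cluster_state)
print(raced_results, raced.core_state, raced.cluster_state)
```

In the raced case both requests are rejected and control returns to the OS on each core, which matches steps 5 to 8 of the table above.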

It is possible that the OS may choose to keep a particular hierarchy node running even if all CPUs underneath it are asleep. This gives rise to another potential corner case - see below.

| Step | Description | OS View of power states | Platform view of power states |
|---|---|---|---|
| 0 | Core0 in PowerDown, and Core1 is running | Core0: PowerDown, Core1: Running, Cluster0: Running | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 1 | Core1 goes idle - OSPM requests Core1 PowerDown and Cluster0 Retention | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 2 | Core0 receives an interrupt and wakes up into platform | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention | Core0: Running, Core1: Running, Cluster0: Running |
| 3 | Core0 moves into OSPM and starts processing interrupt | Core0: Running, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 4 | Core0 goes idle and OSPM requests Core0 Power Down and requests Cluster0 to stay running | Core0: PowerDown, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 5 | Core0’s idle request “passes” Core1’s request. Platform puts Core0 to PowerDown. Even though the OS made a request for the cluster to run, Platform does not know to reject Core0’s request since it doesn’t include a Cluster idle state | Core0: PowerDown, Core1: PowerDown, Cluster0: Running | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 6 | Core1’s request is observed by the platform. Platform puts Core1 to Power Down and Cluster0 to retention. | Core0: PowerDown, Core1: PowerDown, Cluster0: Running!! (See Note below) | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention!! (See Note below) |

Note

In the last row of the table above, the Cluster0 values are mismatched.

The fundamental issue is that the platform cannot infer what hierarchy level a request is for, based on what levels are being placed into a low power mode. To mitigate this, each idle state command must include a hierarchy parameter specifying the highest level hierarchy node for which the OS is making a request in addition to the normal idle state identifier. Even if the OS does not want some higher level hierarchy node to enter an idle state, it should indicate if the core is the last core down for that node. This allows the platform to understand the OS’s view of the state of the hierarchy and ensure ordering of requests even if the OS requests a particular node to stay running.

This enhancement is illustrated in the following table.

| Step | Description | OS View of power states | Platform view of power states |
|---|---|---|---|
| 0 | Core0 in PowerDown, and Core1 is running | Core0: PowerDown, Core1: Running, Cluster0: Running | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 1 | Core1 goes idle - OSPM requests Core1 PowerDown and Cluster0 Retention and identifies itself as last down in Cluster0 | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention | Core0: PowerDown, Core1: Running, Cluster0: Running |
| 2 | Core0 receives an interrupt and wakes up into platform | Core0: PowerDown, Core1: PowerDown, Cluster0: Retention | Core0: Running, Core1: Running, Cluster0: Running |
| 3 | Core0 moves into OSPM and starts processing interrupt | Core0: Running, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 4 | Core0 goes idle and OSPM requests Core0 Power Down, requests Cluster0 to stay running, and identifies itself as last down in Cluster0 | Core0: PowerDown, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 5 | Core0’s idle request “passes” Core1’s request. Platform rejects Core0’s request since it is a request for Cluster0 but Core1 is still awake. | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: Running, Core1: Running, Cluster0: Running |
| 6 | Core1’s request is observed by the platform. Platform rejects Core1’s request since it is a request for Cluster0 but Core0 is still awake. | Core0: PowerDown, Core1: PowerDown, Cluster0: PowerDown | Core0: Running, Core1: Running, Cluster0: Running |
| 7 | OS resumes on Core0 | Core0: Running, Core1: PowerDown, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |
| 8 | OS resumes on Core1 | Core0: Running, Core1: Running, Cluster0: Running | Core0: Running, Core1: Running, Cluster0: Running |

As before, once control is returned to the OS, it can handle as it sees fit - likely just re-requesting the idle state on both cores.
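A possible shape for the check with the hierarchy parameter is sketched below in Python. The topology, the function names, and the encoding of the parameter as a count of ancestor levels are all assumptions made for illustration; the actual encoding is architecture specific.

```python
# Hypothetical topology: each core lists its ancestors, nearest first.
ANCESTORS = {
    "Core0": ["Cluster0", "System"],
    "Core1": ["Cluster0", "System"],
    "Core2": ["Cluster1", "System"],
    "Core3": ["Cluster1", "System"],
}


def cores_under(node):
    """Return the set of cores that belong to the given container."""
    return {core for core, chain in ANCESTORS.items() if node in chain}


def accept(running, core, last_down_level):
    """Dependency check using the hierarchy parameter.

    `running` is the set of cores the platform currently treats as running.
    `last_down_level` is the number of ancestor levels for which the OS
    claims this core is the last one down (0 = core only, 1 = cluster,
    2 = system), regardless of which state it requests for those levels.
    """
    for node in ANCESTORS[core][:last_down_level]:
        if (cores_under(node) - {core}) & running:
            return False  # another core under this node is still awake
    return True


# Steps 5 and 6 of the table above: both cores are running in the platform
# view, and each request claims to be last down in Cluster0.
platform_view = {"Core0", "Core1"}
step5 = accept(platform_view, "Core0", 1)
step6 = accept(platform_view, "Core1", 1)
print(step5, step6)
```

Because the check depends only on the claimed level and not on the requested state, a request that keeps Cluster0 running is ordered against other requests in the same way as one that places it in a low power state.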

8.4.3.3. _LPI (Low Power Idle States)

_LPI is an optional object that describes Low Power Idle states by defining the local power states for each node in a hierarchical processor topology. The OSPM uses the _LPI object to select a local power state for each level of processor hierarchy in the system. These local state selections are then used to produce a composite power state request that is presented to the platform by the OSPM.

This object may be used inside a Processor Container or a processor declaration. _LPI takes the following format:

Arguments:

None

Return Value:

A variable-length Package containing the local power states for the parent Processor or Processor Container device as described in the table below. _LPI evaluation returns the following format:

    Package {
        Revision,  // Integer (WORD)
        LevelID,   // Integer (QWORD)
        Count,     // Integer (WORD)
        LPI[1],    // Package
        ...
        LPI[N]     // Package
    }

| Element | Object Type | Description |
|---|---|---|
| Revision | Integer (WORD) | The revision number of the _LPI object. Current revision is 0. |
| LevelID | Integer (QWORD) | A platform defined number that identifies the level of hierarchy of the processor node to which the LPI states apply. This is used in composition of IDs for OS Initiated states described in Entry Method and Composition. In a platform that only supports platform coordinated mode, this field must be 0. |
| Count | Integer (WORD) | The count of following LPI packages. |
| LPI[1] | Package | A Package containing the definition of LPI state 1. |
| LPI[N] | Package | A Package containing the definition of LPI state N. |

Each LPI sub-Package contains the elements described below:

    Package() {
        Min Residency,                // Integer (DWORD)
        Worst case wakeup latency,    // Integer (DWORD)
        Flags,                        // Integer (DWORD)
        Arch. Context Lost Flags,     // Integer (DWORD)
        Residency Counter Frequency,  // Integer (DWORD)
        Enabled Parent State,         // Integer (DWORD)
        Entry Method,                 // Buffer (ResourceDescriptor) or Integer (QWORD)
        Residency Counter Register,   // Buffer (ResourceDescriptor)
        Usage Counter Register,       // Buffer (ResourceDescriptor)
        State Name                    // String (ASCIIZ)
    }

| Element | Object Type | Description |
|---|---|---|
| Min Residency | Integer (DWORD) | Minimum Residency - time in microseconds after which a state becomes more energy efficient than any shallower state. See Power, Minimum Residency, and Worst Case Wakeup Latency. |
| Worst case wakeup latency | Integer (DWORD) | Worst case time in microseconds from a wake interrupt being asserted to the return to a running state of the owning hierarchy node (processor or processor container). See Power, Minimum Residency, and Worst Case Wakeup Latency. |
| Flags | Integer (DWORD) | Valid flags are described in Flags for LPI states. |
| Arch. Context Lost Flags | Integer (DWORD) | Architecture specific context loss flags. These flags may be used by a processor architecture to indicate processor context that may be lost by the power state and must be handled by OSPM. See Architecture Specific Context Loss Flags for more details. |
| Residency Counter Frequency | Integer (DWORD) | Residency counter frequency in cycles-per-second (Hz). Value 0 indicates that the counter runs at an architecture-specific frequency. Valid only if a Residency Counter Register is defined. |
| Enabled Parent State | Integer (DWORD) | 1-based index of the deepest local power state of the parent node that this LPI state enables. Every shallower power state in the parent is also enabled. 0 implies that no local idle states may be entered at the parent node. |
| Entry Method | Buffer or Integer (QWORD) | This may contain a resource descriptor or an integer. A Resource Descriptor with a single Register() descriptor may be used to describe the register that must be read in order to enter the power state. Alternatively, an integer may be provided, in which case the integer is used in composing the final Register Value that must be used to enter this state. This composition process is described below in Entry Method and Composition. |
| Residency Counter Register | Buffer | Optional residency counter register which provides the amount of time the owning hierarchy node has been in this local power state. The time is counted at the frequency given by the Residency Counter Frequency field (see above). If the platform does not support this register, then the following NULL register descriptor should be used: ResourceTemplate() {Register (SystemMemory, 0, 0, 0, 0)} |
| Usage Counter Register | Buffer | Optional register that provides the number of times the owning hierarchy node has been in this local power state. If the platform does not support this register, then the following NULL register descriptor should be used: ResourceTemplate() {Register (SystemMemory, 0, 0, 0, 0)} |
| State Name | String (ASCIIZ) | String containing a human-readable identifier of this LPI state. This element is optional and an empty string (a null character) should be used if this is not supported. |

| Element | Bits | Description |
|---|---|---|
| Enabled | 0 | 1 if the power state is enabled for use; 0 if the power state is disabled |

It is not required that all processors or processor containers include _LPI objects. However, if a processor container includes an _LPI object, then all of its child processors or processor containers must have _LPI objects.
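To make the package layout concrete, the Python sketch below decodes an _LPI return value into records and picks a local state. The `LpiState`, `parse_lpi`, and `pick_state` names, the sample values, and the selection policy (deepest enabled state whose minimum residency and wakeup latency fit) are all assumptions for illustration; the states are assumed to be listed from shallowest to deepest, and `None` stands in for the NULL register descriptor.

```python
from dataclasses import dataclass

ENABLED = 1 << 0  # bit 0 of the Flags field


@dataclass
class LpiState:
    index: int  # 1-based position in the _LPI package
    min_residency_us: int
    wakeup_latency_us: int
    flags: int
    arch_context_lost: int
    residency_counter_hz: int
    enabled_parent_state: int
    entry_method: object
    residency_counter: object
    usage_counter: object
    name: str

    @property
    def enabled(self):
        return bool(self.flags & ENABLED)


def parse_lpi(package):
    """Split an _LPI package into its LevelID and a list of LpiState."""
    revision, level_id, count, *raw_states = package
    if revision != 0:
        raise ValueError(f"unsupported _LPI revision: {revision}")
    if count != len(raw_states):
        raise ValueError("Count does not match the number of LPI packages")
    states = [LpiState(i, *raw) for i, raw in enumerate(raw_states, start=1)]
    return level_id, states


def pick_state(states, expected_idle_us, latency_limit_us):
    """Return the deepest usable state, or None to stay in the running state."""
    best = None
    for state in states:
        if (state.enabled
                and state.min_residency_us <= expected_idle_us
                and state.wakeup_latency_us <= latency_limit_us):
            best = state
    return best


# Made-up values; the second state has its Enabled flag cleared.
EXAMPLE = [0, 0, 3,
           [10, 5, 1, 0, 0, 1, 0x01, None, None, "Clock Gate"],
           [100, 50, 0, 0, 0, 2, 0x02, None, None, "Retention"],
           [1000, 500, 1, 0, 0, 3, 0x03, None, None, "Power Down"]]

level_id, lpi_states = parse_lpi(EXAMPLE)
choice = pick_state(lpi_states, expected_idle_us=200, latency_limit_us=100)
print(choice.name if choice else "Running")
```

Disabled states keep their position in the list, so the index recorded for each state stays stable when a state is disabled.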

The following sections describe the more complex properties of LPI in more detail, as well as rules governing wakeup for LPI states.

8.4.3.3.1. Disabling a State

When a local state is disabled by clearing the Enabled bit in the Flags field, any deeper states for that node are not renumbered. This allows other properties which rely on indexing into the state list for that node (Enabled Parent State for example) to not change.

Disabled states should not be requested by the OS, and the values returned by their Residency/Usage Counter Registers are undefined.
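The index stability described above can be illustrated with a minimal sketch. The list representation and the names `LocalState` and `requestable_states` are hypothetical and chosen only for illustration; they are not defined by this specification.

```python
from dataclasses import dataclass


@dataclass
class LocalState:
    name: str
    enabled: bool = True  # mirrors the Enabled bit (bit 0) of the Flags field


# Hypothetical cluster-level state list; list position + 1 is the LPI index.
cluster_states = [
    LocalState("Clock Gating"),
    LocalState("Retention", enabled=False),  # disabled, but still occupies index 2
    LocalState("Power Down"),
]


def requestable_states(states):
    """Return (1-based index, state) pairs that OSPM may request."""
    return [(i, s) for i, s in enumerate(states, start=1) if s.enabled]


indices = [i for i, _ in requestable_states(cluster_states)]
print(indices)  # [1, 3]
```

Because Power Down keeps index 3, a child state whose Enabled Parent State is 3 still refers to the same parent state after Retention is disabled.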

8.4.3.3.2. Enabled Parent State

As mentioned above, LPI states represent local states, which must be combined into a composite state. However, not every combination is possible. Consider the example system described in Power states for processor hierarchy. In this system it would not be possible to simultaneously select clock gating as the local state for Core0 and power down as the local state for Cluster0. As Core0 is physically in Cluster0, power gating the cluster would imply power gating the core. The correct combinations of local states for this example system are described in Valid Local State Combinations in preceding example system. LPI states support enumeration of the correct combinations through the Enabled Parent State (EPS) property.

LPI states are 1-indexed. Much like C and S states, LPI0 is considered to be a running state. For a given LPI, the EPS is a 1-based index into the parent processor container's _LPI states. The index points at the deepest local power state of the parent that the given LPI state enables. Every shallower power state in the parent is also enabled, and an EPS of 0 means the parent must remain running. For the system described in Fig. 8.7, the states and their EPS values are listed in Table 8.14 below.

| Category / Bit Value | State | Enabled Parent State |
|---|---|---|
| System Level ProcessorContainer LPI States | | |
| 0 | Running | N/A |
| 1 | Power Down | 0 |
| Cluster Level ProcessorContainer LPI States | | |
| 0 | Running | N/A |
| 1 | Clock Gating | 0 – System must be running if cluster is clock gated |
| 2 | Retention | 0 – System must be running if cluster is in retention |
| 3 | Power Down | 1 – System may be in power down if cluster is in power down |
| Core Level ProcessorContainer LPI States | | |
| 0 | Running | N/A |
| 1 | Clock Gating | 1 – Cluster may be clock gated or running if core is clock gated |
| 2 | Retention | 2 – Cluster may be running, clock gated, or in retention if core is in retention |
| 3 | Power Down | 3 – All states at cluster level are supported if the core is powered down |
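As an illustration of how the EPS values in Table 8.14 constrain composite states, the following sketch checks a core/cluster/system combination from the point of view of a single processor. The function names are hypothetical, and the rule that a running child forces its parent to stay running is an assumption drawn from the physical containment argument above rather than from a field in _LPI. A real OSPM would also need every other child of the container to agree.

```python
# EPS values transcribed from Table 8.14; state 0 (Running) has no EPS.
CORE_EPS = {1: 1, 2: 2, 3: 3}
CLUSTER_EPS = {1: 0, 2: 0, 3: 1}


def parent_state_allowed(child_state, parent_state, child_eps):
    """True if the child's selected local state enables parent_state."""
    if parent_state == 0:   # the parent may always remain running
        return True
    if child_state == 0:    # assumption: a running child keeps its parent running
        return False
    # The EPS names the deepest enabled parent state; shallower ones are enabled too.
    return parent_state <= child_eps[child_state]


def composite_is_valid(core, cluster, system):
    """Check one processor's view of a core/cluster/system combination."""
    return (parent_state_allowed(core, cluster, CORE_EPS)
            and parent_state_allowed(cluster, system, CLUSTER_EPS))


print(composite_is_valid(1, 3, 0))  # False: core clock gated, cluster powered down
print(composite_is_valid(3, 3, 1))  # True: everything powered down
```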

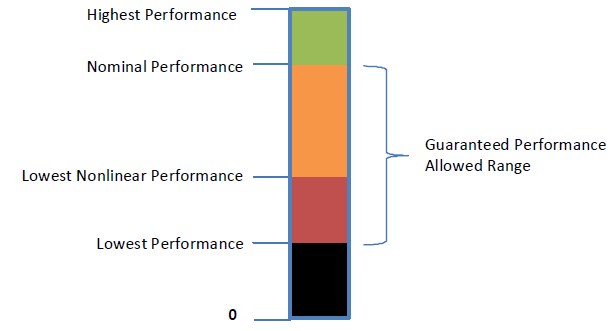

8.4.3.3.3. Power, Minimum Residency, and Worst Case Wakeup Latency

Power is not included in _LPI since relative power of different states (along with minimum residency to comprehend transition energy), and not absolute power, drive OSPM idle state decisions. To correctly convey relative power, local states in _LPI must be declared in power consumption order. That is, the local states for a particular hierarchy node must be listed from highest power (shallowest) to lowest power (deepest).

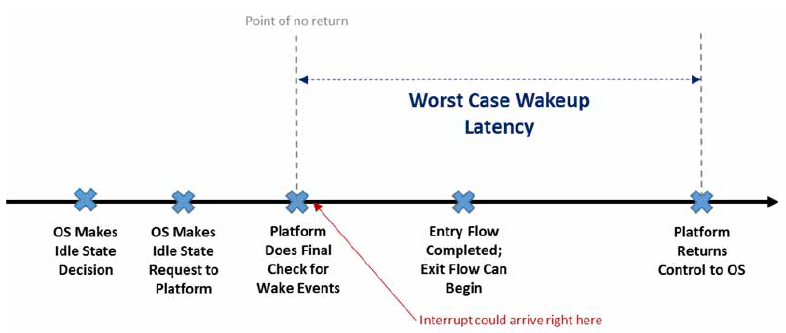

The worst case wakeup latency (WCWL) for a particular local state is the longest time from when a wake interrupt is asserted, to when the hierarchy node can return to execution. Generally, the WCWL will be the idle state’s exit latency plus some portion of its entry latency. How much of the entry flow is included depends on where (and if) the platform supports checking for pending wake events and aborting the idle state entry. For any given power state there will be a “point of no return” after which the entry into the power state cannot be reversed. This is illustrated in Worst case wake latency below. The WCWL must include the time period from the point of no return to the time at which a wake up interrupt can be handled.

Fig. 8.8 Worst case wake latency

Note that other worst case paths could end up determining the WCWL, but what is described above is expected to be the most common. For example, there could be another period between the OS making the idle request and the point of no return where the platform does not check for wake up events, and which is longer than the time taken to enter and exit the power state. In that case that period would become the worst case wakeup latency.

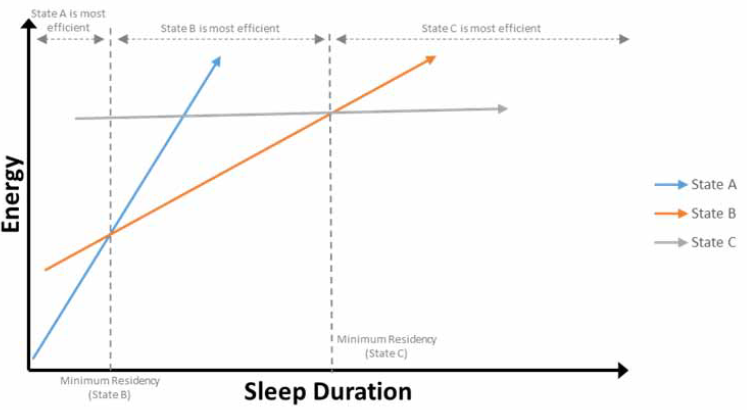

Fig. 8.9 Energy of states A,B and C versus sleep duration

Minimum residency (MR) is the time after which a state becomes more energy efficient than any shallower state. This parameter answers the fundamental question: how long does the hierarchy node need to stay in the idle state to overcome the energy cost of transitioning in/out, and make choosing that state a net win relative to shallower alternatives? Note that this also includes comparing against not entering an idle state and keeping the node running. This is illustrated in Energy of states A,B and C versus sleep duration, which shows the energy associated with three different state choices as a function of the sleep duration. Note that State A’s MR relative to keeping the node running is not pictured.
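The crossover idea can be made concrete with a small sketch. It assumes a simple linear energy model, in which each state costs a fixed transition energy plus a constant power multiplied by the sleep duration. That model, the function names, and all numeric values are illustrative assumptions and are not taken from this specification.

```python
def crossover_us(deep, shallow):
    """Sleep duration (us) beyond which `deep` costs less energy than `shallow`.

    Each state is (transition energy in nJ, steady-state power in mW);
    mW * us = nJ, so the units are consistent.
    """
    (e_deep, p_deep), (e_shallow, p_shallow) = deep, shallow
    return (e_deep - e_shallow) / (p_shallow - p_deep)


def minimum_residency_us(state, shallower_states):
    """MR under the assumed model: the state must beat every shallower choice."""
    return max([0.0] + [crossover_us(state, s) for s in shallower_states])


# Hypothetical numbers: running has no transition cost but the highest power.
running = (0, 100)
state_a = (2000, 60)
state_b = (10000, 20)

mr_a = minimum_residency_us(state_a, [running])
mr_b = minimum_residency_us(state_b, [running, state_a])
print(mr_a, mr_b)  # 50.0 200.0
```

In this example State B beats staying in the running state after 125 us, but it only beats State A after 200 us, so the later crossover sets its MR.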

Generally, minimum residency and worst case wakeup latency will be larger for deeper states; however, this may not always be the case. Taking a different example from the one above, consider two system level states, StateY and StateZ, with similar entry overhead but where StateZ saves more power than StateY. An abstract state list might look like:

StateX: MR = 100 us

StateY: MR = 1000 us

StateZ: MR = 800 us, power resource A must be OFF

From an energy perspective, StateZ is always preferred, but in this example, StateZ is only available when certain device dependencies are met. This makes StateY attractive when the dependencies cannot be met. Despite being the deeper (lower power) state, StateZ has a lower MR than StateY since the entry overheads are similar and StateZ’s lower power more quickly amortizes the transition cost. Although the crossover, which sets MR, should generally be versus the next shallowest state, MR is defined relative to any shallower (higher power) state to deal with cases like this. In this case, StateZ’s MR is set by the crossover with StateX since StateZ (if allowed based on device dependencies) is always preferred to StateY. To achieve the lowest energy, OSPM must select the deepest (lowest power) state for which all entry constraints are satisfied and should not assume that deeper states are not viable just because a shallower state’s WCWL/MR threshold was not met.
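A minimal sketch of this selection rule, using the StateX/StateY/StateZ list above, is shown below. The `IdleState` type, the `select_state` function, and the reduction of the entry constraints to an MR check plus one power resource dependency are simplifying assumptions; WCWL constraints are omitted because the example list does not give them.

```python
from dataclasses import dataclass


@dataclass
class IdleState:
    name: str
    mr_us: int
    needs_resource_a_off: bool = False


# Listed from shallowest to deepest, as _LPI requires.
STATES = [
    IdleState("StateX", 100),
    IdleState("StateY", 1000),
    IdleState("StateZ", 800, needs_resource_a_off=True),
]


def select_state(expected_sleep_us, resource_a_off):
    """Return the deepest state whose entry constraints are all satisfied."""
    for state in reversed(STATES):  # examine the deepest state first
        if state.needs_resource_a_off and not resource_a_off:
            continue                # device dependency not met
        if expected_sleep_us >= state.mr_us:
            return state.name
    return None                     # no idle state pays off; keep running


print(select_state(900, resource_a_off=True))    # StateZ
print(select_state(900, resource_a_off=False))   # StateX
print(select_state(1500, resource_a_off=False))  # StateY
```

The first call shows the point made above: a 900 us sleep does not meet StateY's MR, yet the deeper StateZ is still viable and is selected.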

Since WCWL may be used by OSPM to restrict idle state selection and guarantee response times to critical interrupts, it should be set conservatively (erring on the high side) so that OSPM is not surprised with worse than specified interrupt response time. On the other hand, MR helps OSPM make efficient decisions. If MR is inaccurate in a certain scenario and OSPM chooses a state which is deeper or shallower than optimal for a particular idle period, there may be some wasted energy but the system will not be functionally broken. This is not to say that MR doesn’t matter (energy efficiency is important), just that the platform may choose to optimize MR based on the typical case rather than the worst case.

8.4.3.3.3.1. Minimum Residency and Worst Case Wakeup Latency Combination Across Hierarchy Levels

The WCWL in _LPI is for a particular local state. When evaluating composite state choices versus system latency tolerance as part of idle state selection, OSPM will add wakeup latencies across hierarchy levels. For example, if a system has core powerdown with WCWL = 50 us and cluster powerdown with WCWL = 20 us then the core powerdown + cluster powerdown composite state latency is calculated as 70 us.

MRs defined in _LPI apply to a particular hierarchy node. The implicit assumption is that each hierarchy node represents an independent power manageable domain and can be considered separately. For example, assume that a cluster retention state is legal if the underlying cores are in core powerdown or core retention. The MR for cluster retention is based on the energy cost of taking shared logic outside of the cores in and out of retention versus the steady state power savings achieved in that shared logic while in that state. The key is that the specific state chosen at the core level does not fundamentally affect the cluster level decision since it is tied to properties of shared logic outside the core. The energy cost of entering/exiting the cluster state and the power savings it provides are independent of whether the core is in retention or powerdown. Based on this, MRs are considered independent per level in ACPI. That is, when comparing MR for different states to expected sleep duration for a particular node, OSPM uses the MRs defined in that node’s _LPI as is with no adjustment based on states at lower levels of hierarchy (though of course the state must be legal based on the lower level state’s Enabled Parent State property).

8.4.3.3.3.2. Known Limitations with Minimum Residency and Worst Case Wakeup Latency

Note that the WCWL and MR parameters are not perfect. For example, they do not scale with frequency, voltage, temperature, and various other factors which may affect them. Nor are the rules for how they combine across levels perfect. For example, cluster level MRs may move slightly based on core state choice since the entry latency of the core state will delay entry into the cluster state, derating the expected sleep duration. The cluster level MR can be adjusted to comprehend this, but if multiple core level states with different entry latencies enable the same cluster state, then its MR cannot perfectly comprehend them all. With that said, this set of parameters and combination scheme is believed to strike a good balance between simplicity/usability and accuracy.

8.4.3.3.4. Entry Method and Composition

The OSPM combines Local LPI states to create an overall composite power state. Each LPI state provides an entry method field. These fields, for the selected local power states, are combined to create the entry method register that must be read in order to enter a given composite power state.

To derive the appropriate register address from the local states’ entry methods, the following approach is used:

1. Local states for Processors always declare a register-based entry method. This provides a base register.

2. Higher levels may use an integer or a register. If an Integer is used, then its value must be added to the base register obtained in step 1. If a register is used, then this becomes the new base register, overriding any previous value. Note that in this case, the selected LPI must imply specific local LPI selections for all lower level nodes.

In OS Initiated mode it is also necessary for the OSPM to tell the platform on which hierarchy level the calling processor is the last to go idle. This is done by adding the Level ID property of the hierarchy node’s LPI to the base register.

The basic composition algorithm for entry state is shown in the pseudo-code below for a platform coordinated system:

    Reg = SelectedLocalState(CurrentProcessor).EntryMethod
    WCWL = SelectedLocalState(CurrentProcessor).WCWL
    MR = SelectedLocalState(CurrentProcessor).MR
    for level = Parent(CurrentProcessor) to system
        LocalState = SelectedLocalState(level)
        If LocalState == Run
            break
        EM = LocalState.EntryMethod
        WCWL = WCWL + LocalState.WCWL
        MR = LocalState.MR
        If IsInteger(EM)
            Reg.Addr = Reg.Addr + ZeroExtend(EM)
        Else
            // Entry method here overrides any previous method
            Reg = EM
    CompositeState.EntryMethod = Reg
    CompositeState.WCWL = WCWL
    CompositeState.MR = MR
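The platform coordinated pseudo-code can be rendered as a runnable sketch. The `Register` and `LocalState` types, the `compose` function, the register address, and the MR values below are hypothetical. The WCWL values reuse the 50 us core and 20 us cluster figures from the combination example earlier in this section.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Register:
    addr: int


@dataclass
class LocalState:
    entry_method: Union[Register, int]
    wcwl_us: int
    mr_us: int


def compose(processor_state, ancestor_states):
    """Compose a state from the processor's state and its ancestors' states.

    ancestor_states lists the selected local state of each level, parent
    first and system last; None stands for the Run state.
    """
    reg = Register(processor_state.entry_method.addr)  # base register (copied)
    wcwl, mr = processor_state.wcwl_us, processor_state.mr_us
    for state in ancestor_states:
        if state is None:             # this level stays running: stop here
            break
        wcwl += state.wcwl_us         # latencies add up across levels
        mr = state.mr_us              # MR comes from the highest idle level
        em = state.entry_method
        if isinstance(em, int):
            reg.addr += em            # integer: offset from the base register
        else:
            reg = Register(em.addr)   # register: overrides any previous method
    return reg, wcwl, mr


core_pd = LocalState(Register(0x1000), wcwl_us=50, mr_us=500)
cluster_pd = LocalState(0x20, wcwl_us=20, mr_us=2000)
reg, wcwl, mr = compose(core_pd, [cluster_pd, None])
print(hex(reg.addr), wcwl, mr)  # 0x1020 70 2000
```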

In OS Initiated mode it is also necessary for the OSPM to tell the platform on which hierarchy level the calling processor is the last to go idle and request a power state. To do this, the algorithm above is modified as follows:

    Reg = SelectedLocalState(CurrentProcessor).EntryMethod
    WCWL = SelectedLocalState(CurrentProcessor).WCWL
    MR = SelectedLocalState(CurrentProcessor).MR
    RegDecided = False
    // Retrieve Level Index from Processor's _LPI object
    LastLevel = GetLevelIDOfLevel(CurrentProcessor)
    for level = Parent(CurrentProcessor) to system
        LocalState = SelectedLocalState(level)
        If LocalState == Run
            break
        EM = LocalState.EntryMethod
        WCWL = WCWL + LocalState.WCWL
        MR = LocalState.MR
        If IsInteger(EM)
            Reg.Addr = Reg.Addr + ZeroExtend(EM)
        Else
            // Entry method is register
            Reg = EM
        If IsProcessorLastInLevel(CurrentProcessor, level)
            // If calling processor is last one to go idle in
            // current level, retrieve Level Index from
            // the container's _LPI object
            LastLevel = GetLevelIDOfLevel(level)
    Reg.Addr = Reg.Addr + LastLevel
    CompositeState.EntryMethod = Reg
    CompositeState.WCWL = WCWL
    CompositeState.MR = MR
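The OS Initiated variant can be sketched in the same way. As before, the types, the `compose_os_initiated` function, and all numeric values (including the Level ID values) are hypothetical. The sketch assumes that MR is tracked per level as in the platform coordinated algorithm.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Register:
    addr: int


@dataclass
class LocalState:
    entry_method: Union[Register, int]
    wcwl_us: int
    mr_us: int


def compose_os_initiated(proc_state, proc_level_id, ancestors):
    """ancestors: (state or None, level_id, is_last_in_level), parent first."""
    reg = Register(proc_state.entry_method.addr)
    wcwl, mr = proc_state.wcwl_us, proc_state.mr_us
    last_level = proc_level_id          # Level ID from the processor's _LPI
    for state, level_id, is_last in ancestors:
        if state is None:               # this level stays running: stop here
            break
        wcwl += state.wcwl_us
        mr = state.mr_us
        em = state.entry_method
        if isinstance(em, int):
            reg.addr += em
        else:
            reg = Register(em.addr)
        if is_last:                     # calling processor is last to go idle here
            last_level = level_id       # Level ID from the container's _LPI
    reg.addr += last_level              # tell the platform the last-idle level
    return reg, wcwl, mr


core_pd = LocalState(Register(0x1000), wcwl_us=50, mr_us=500)
cluster_pd = LocalState(0x20, wcwl_us=20, mr_us=2000)
reg_last, _, _ = compose_os_initiated(
    core_pd, 0x0, [(cluster_pd, 0x100, True), (None, 0x200, False)])
reg_not_last, _, _ = compose_os_initiated(
    core_pd, 0x0, [(cluster_pd, 0x100, False), (None, 0x200, False)])
print(hex(reg_last.addr), hex(reg_not_last.addr))  # 0x1120 0x1020
```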